Robotics+AI (Part 2) Development: Advanced task sensing, learning, adapting

This is Part 2 of 4 in a series on Robotics+AI: recent developments, and the surprising realities we grapple with.

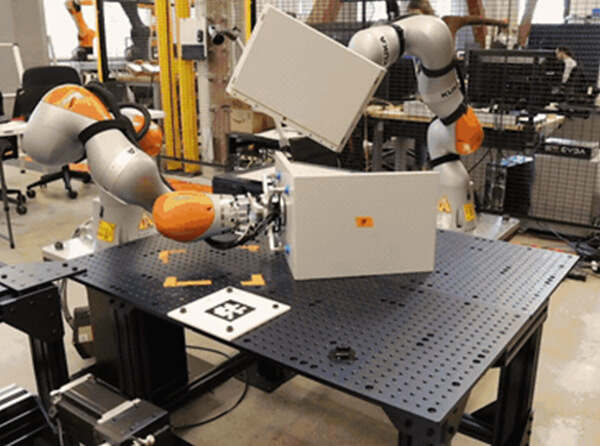

INTRINSIC (an Alphabet company) develops software and tools that make industrial robots easier to use, less costly, and more flexible, enabling new products, businesses, and services.

Why needed

Programming robots for industrial applications is costly and complex, particularly for sensing and adapting to the environment, where programming time can run into hundreds of hours.

What it offers

INTRINSIC software and AI tools significantly simplify the development of industrial robots’ ability to sense, learn, and automatically make adjustments while on task, so they can be applied in a wider range of settings and applications.

Sensor data from the robot’s environment can be put to practical use, letting the robot “sense, learn from, and quickly adapt to the real world.”

INTRINSIC’s automation techniques include automated perception, deep learning, reinforcement learning, motion planning, force control, and simulation.

Hard-coding work that has taken hundreds of hours can now be reduced to 2 hours.

Why significant

Dexterous and delicate tasks, like inserting plugs or moving cords, have eluded robots because robots have lacked the sensors or software needed to understand their physical surroundings.

Industrial-robot development is key to advanced manufacturing, especially in the face of decreasing labor availability (a projected shortfall of 2.3 million workers by 2030).

With the Intrinsic software and tools, developers can reimagine industrial robots. We can take advantage of the declining cost of industrial robot hardware and the availability of lower-cost sensors for perception and tactile skills.

What still keeps us awake at night

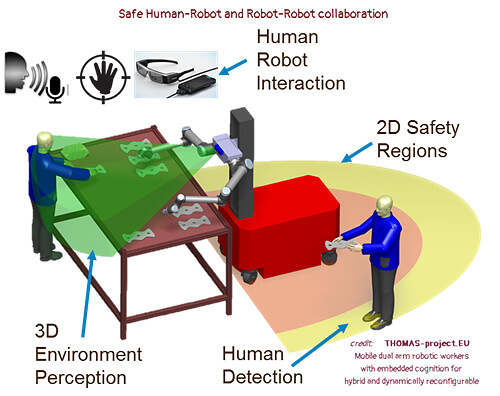

How does a robot recognize and keep safe a human worker straying into its workspace?

- Industrial robots operate in what are essentially controlled and constant environments. Handling task-related variables is challenging enough, let alone truly grasping and responding to what is happening around them, outside of their training sets.

- The current state of the art does not give robots the awareness and intelligence to work alongside humans; instead, humans must observe specified safety zones.

- Guidelines and regulations are still emerging along with the robots themselves. The US NIOSH is developing standards for industrial robots specifying “guarded areas” to maintain safe distance from humans. For Europe, the THOMAS.EU project offers guidelines.

- NIOSH categorizes robots as: (1) industrial; (2) professional and personal service; (3) collaborative. Collaborative robots work alongside humans. (See NIOSH)
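The “guarded area” idea can be illustrated with a minimal sketch, assuming a robot base at a known floor position, 2D human positions supplied by some upstream perception system, and two circular zones: a protective zone where the robot stops and a warning zone where it slows. The names `guarded_area_status`, `PROTECTIVE_RADIUS_M`, and `WARNING_RADIUS_M` and their values are hypothetical illustrations, not taken from NIOSH, THOMAS.EU, or INTRINSIC; real deployments rely on certified safety-rated sensors and controllers rather than application code like this.

```python
import math
from dataclasses import dataclass

# Hypothetical zone radii in metres; illustrative values only, not from any standard.
PROTECTIVE_RADIUS_M = 1.5  # a human inside this radius forces a stop
WARNING_RADIUS_M = 3.0     # a human inside this radius forces reduced speed


@dataclass
class Point:
    x: float
    y: float


def distance(a: Point, b: Point) -> float:
    """Euclidean distance between two points on the shop-floor plane."""
    return math.hypot(a.x - b.x, a.y - b.y)


def guarded_area_status(robot: Point, humans: list[Point]) -> str:
    """Return 'stop', 'slow', or 'run' based on the closest detected human.

    Assumes human positions come from an upstream perception system;
    an empty list means no human was detected.
    """
    if not humans:
        return "run"
    closest = min(distance(robot, h) for h in humans)
    if closest <= PROTECTIVE_RADIUS_M:
        return "stop"
    if closest <= WARNING_RADIUS_M:
        return "slow"
    return "run"


if __name__ == "__main__":
    robot = Point(0.0, 0.0)
    print(guarded_area_status(robot, []))                                  # run
    print(guarded_area_status(robot, [Point(2.0, 0.0)]))                   # slow
    print(guarded_area_status(robot, [Point(5.0, 5.0), Point(1.0, 1.0)]))  # stop
```

The sketch also exposes the limitation raised above: it only reacts to humans the perception layer actually reports, so a worker it fails to detect is treated as absent.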

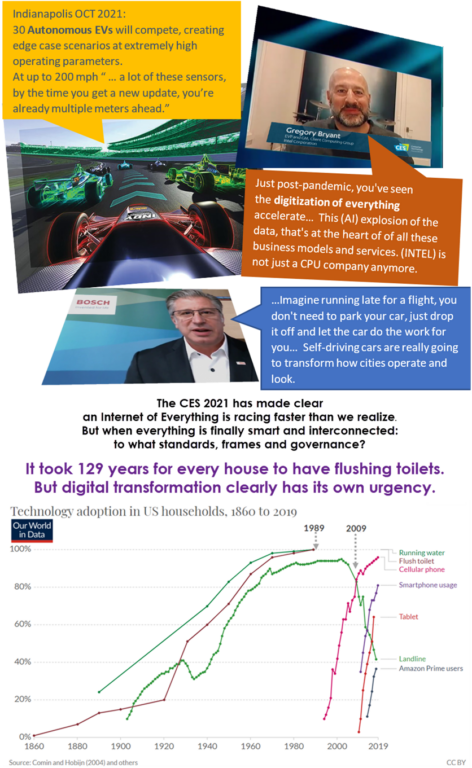

What can we learn from autonomous-vehicle development issues?

- Tesla in particular has contended with the US FTC (consumer protection) and the NHTSA. Drivers and owners do signify their acceptance of the risks spelled out by Tesla; the more recent question concerns the danger to people outside the vehicle.

- Currently, the US NHTSA and watchdog groups are scrutinizing Tesla’s “Traffic Aware Cruise Control” and the 11 reported incidents in which Tesla vehicles crashed into parked first-responder vehicles.

- Machine vision is nowhere near as adaptive as human vision: “…vehicles are designed to ignore stationary objects when traveling at more than 40 mph so they don’t slam on the brakes when approaching overpasses or other stationary objects on the side of the road, such as a car stopped on the shoulder.”

- China’s State Administration for Market Regulation triggered a recall of 285,000 Teslas, citing the risk that the cruise control system could be activated inadvertently, causing sudden acceleration. (CNN Business)

- Tesla does instruct drivers that “current Autopilot features require active driver supervision and do not make the vehicle autonomous.”

- Furthermore, design and execution are one area; marketing claims and substantive product claims are another. Can “autopilot” be construed as autonomous piloting? US senators have asked the FTC to review Tesla’s statements about the claimed autonomous capabilities of its Autopilot and Full Self-Driving systems.

- No doubt, promotional and labeling claims will be increasingly scrutinized as these incidents accumulate.
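The quoted design trade-off (ignoring stationary objects above 40 mph) can be made concrete with a toy sketch. It assumes each detection carries the object’s own speed over the ground and applies a single hypothetical rule: above the threshold, stationary objects are dropped. The names `Detection`, `objects_to_brake_for`, and `STATIONARY_TOLERANCE_MPH` are hypothetical, and this is not Tesla’s or any vendor’s actual logic; it only shows why an overpass and a vehicle stopped in the lane can look alike to such a filter.

```python
from dataclasses import dataclass

STATIONARY_FILTER_MPH = 40.0     # threshold taken from the quoted description
STATIONARY_TOLERANCE_MPH = 1.0   # hypothetical: below this speed an object counts as stationary


@dataclass
class Detection:
    label: str
    ground_speed_mph: float  # object's own speed over the ground (hypothetical field)


def objects_to_brake_for(ego_speed_mph: float, detections: list[Detection]) -> list[str]:
    """Return labels of detections that remain candidates for braking.

    Above the threshold speed, stationary objects are dropped to avoid
    phantom braking for overpasses and cars on the shoulder. The side
    effect is that a vehicle stopped in our lane is dropped as well.
    """
    keep = []
    for d in detections:
        is_stationary = abs(d.ground_speed_mph) < STATIONARY_TOLERANCE_MPH
        if ego_speed_mph > STATIONARY_FILTER_MPH and is_stationary:
            continue  # filtered out, even if it is a stopped vehicle in our lane
        keep.append(d.label)
    return keep


if __name__ == "__main__":
    scene = [
        Detection("overpass", 0.0),
        Detection("fire truck stopped in lane", 0.0),
        Detection("car ahead", 55.0),
    ]
    print(objects_to_brake_for(65.0, scene))  # ['car ahead']
    print(objects_to_brake_for(30.0, scene))  # all three labels
```

Production systems are far more complex than a single threshold; the point is only that a hard rule tuned to suppress false alarms can also suppress real hazards.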

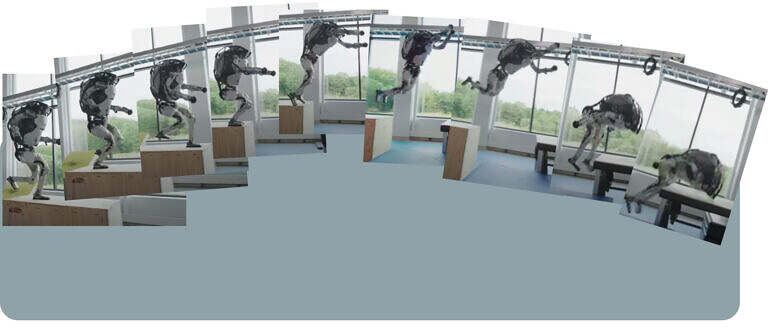

- The US Society of Automotive Engineers (SAE) defines 6 levels of driving automation (Levels 0 to 5). In that context, Tesla is at Level 2 (Partial Automation). Many analysts expect further advances to require years of development.

- The autonomous-vehicle case could be much farther down the road if environmental and interactive variability were reduced through standardized environments, signals, communications, and so on. (Hint: AI idealizes human intelligence… and we humans have governing structures and limits, but even those do not eliminate accidents and rogue actions.)

- Let’s start with a frank and explicit self-appraisal of where we are with AI’s abilities to process and plan in dynamic environments.